I’ve been somewhat obsessed with Large Language Models (LLMs) lately.

I hadn’t paid much attention to them until ChatGPT was released. I had seen articles about GPT, GPT-2 and so on here and there, but I didn’t think it was that special at the time.

Then I tried out ChatGPT, and it was way better than I could’ve imagined. Whenever I see something magical that I thought wasn’t possible yet, it pulls me in.

I’ve wanted to get into machine learning for years now, but didn’t find enough motivation to get over the “I don’t even know where to start” phase.

I feel like LLMs finally did it. I wish I had started a couple of years sooner, but it’s not a competition for me.

What is an LLM?

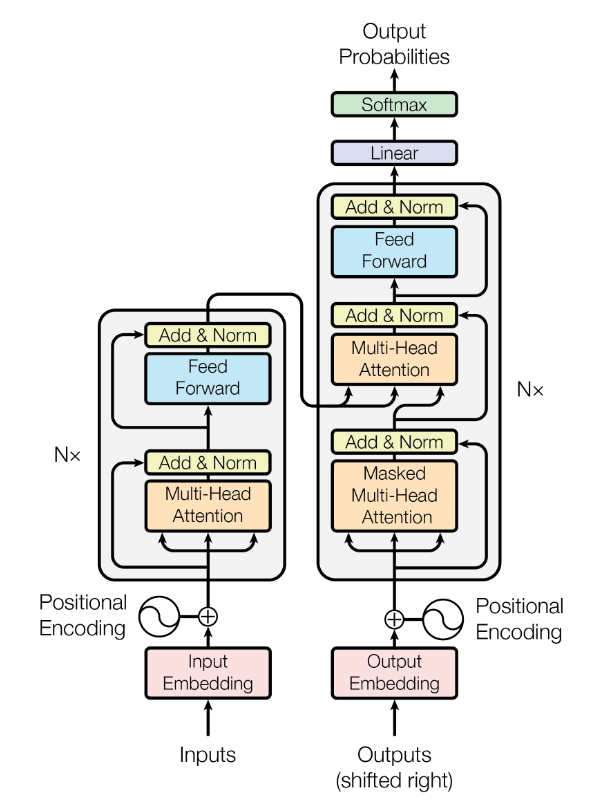

I think most tech people know what a Large Language Model is at this point, but here is a brief summary for those who don’t. It all started in 2017 with a paper called Attention Is All You Need. The paper suggested a new machine learning architecture for translation - the Transformer. Fairly quickly, the architecture found use all across the field, not just translation.

The proposed architecture consists of an encoder (the left branch in the image) and a decoder. The model generates output one token at a time (a token is usually part of a word, a number, etc.), while potentially paying attention to any part of its context. Previous state-of-the-art (SOTA) models mostly processed their input sequentially, but a Transformer can use its entire context in parallel. Not only that, it also scaled incredibly well with more data and more parameters.

Architecture diagram from the paper
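To make “paying attention to any part of its context” a bit more concrete, here is a minimal sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)·V, the core operation described in the paper, written in plain Python. It adds a causal mask (each token only sees itself and earlier tokens), which is what decoder-only models like GPT use. The toy vectors are made up; a real model uses learned projections, multiple heads and a tensor library.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask.

    queries, keys, values: lists of equal length, one vector per token.
    Returns one output vector per token.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        # Token i may only attend to tokens 0..i (the causal mask).
        scores = [
            sum(qa * ka for qa, ka in zip(q, keys[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Output is a weighted average of the visible value vectors.
        out = [
            sum(w * values[j][dim] for j, w in enumerate(weights))
            for dim in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs

# Three toy tokens with 2-dimensional vectors (used as Q, K and V alike).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_attention(x, x, x)
print(out[0])  # the first token can only attend to itself: [1.0, 0.0]
```

Note that every position's scores can be computed independently of the others, which is the “entire context in parallel” property; the loops here are just for readability.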

In 2018, OpenAI introduced a decoder-only Transformer model, the Generative Pre-trained Transformer (GPT). The model can be fed random text from the internet, and it learns to complete/continue it. To get better at completion, it has to learn to understand the text, the context, the world: everything. The greatest thing about the model was that it appeared to scale really well with model size. You could just make the model bigger, throw more compute at the problem, and it kept getting better with no limits in sight.
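The “learn to continue text” idea and the one-token-at-a-time generation loop are easier to see with a toy. In the sketch below, a count-based bigram model stands in for the Transformer (a deliberate simplification: no attention, no learned weights, whole words instead of tokens). Only the loop itself (predict the next token, append it, repeat) mirrors how GPT-style models generate.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    # "Training": count which word follows which in the corpus.
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_tokens=5):
    # Autoregressive loop: predict one token, append it, repeat.
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        candidates = counts.get(tokens[-1])
        if not candidates:
            break  # no known continuation for this word
        # Greedy decoding: take the most frequent next word
        # (ties go to the continuation seen first in the corpus).
        tokens.append(candidates.most_common(1)[0][0])
    return " ".join(tokens)

corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(generate(model, "the cat", max_new_tokens=3))  # the cat sat on the
```

Real models sample from the predicted distribution instead of always taking the top choice, which is one reason the same prompt can produce different completions.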

I’d say GPT-2 is roughly where people really started seeing something special in the model.

A famous example: give the model a long text, type “TLDR” after it, and the model writes a summary. It didn’t just reproduce some random text it had seen before; it summarized the input. The model had learned to generalize summarization just from reading text.

Text completion on its own isn’t very interesting. As a next step, the model is fine-tuned for instruction following. Since it has already learned things like grammar and contextual understanding, this step needs orders of magnitude less data. Sprinkle in some alignment and special sauce, and that’s very roughly what today’s ChatGPT is.
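A common way to prepare instruction-tuning data is to concatenate prompt and answer but compute the loss only on the answer tokens. The sketch below shows that masking idea under loud assumptions: the `### Instruction:` / `### Response:` template is an Alpaca-style illustration, the whitespace “tokenizer” is a stand-in for a real one, and `build_example` is a hypothetical helper. The value -100 is the default `ignore_index` of PyTorch’s `CrossEntropyLoss`, so those positions contribute nothing to the loss.

```python
IGNORE_INDEX = -100  # PyTorch's CrossEntropyLoss skips targets equal to this by default

def build_example(instruction, response, vocab):
    """Turn one instruction/response pair into (input_ids, labels).

    The template and whitespace tokenization are illustrative assumptions,
    not what any particular model uses.
    """
    prompt = f"### Instruction:\n{instruction}\n### Response:\n"
    # Assign a new id to each unseen token (toy vocabulary).
    prompt_ids = [vocab.setdefault(tok, len(vocab)) for tok in prompt.split()]
    response_ids = [vocab.setdefault(tok, len(vocab)) for tok in response.split()]
    input_ids = prompt_ids + response_ids
    # Only response tokens are trained on; prompt positions are masked out.
    # (Many training setups shift labels by one position internally.)
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels

vocab = {}
ids, labels = build_example("Summarize: cats are nice.", "Cats are nice.", vocab)
print(ids)
print(labels)
```

Because the model only has to learn how to answer, not how language works, a small dataset of such pairs goes a long way.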

There isn’t a single other species on Earth that can understand us, and now the dumb computer, which needed every instruction spelled out, has learned to talk to us in just a few years.

Everyone is losing their mind. AI is taking over. The world is ending. Very dramatic.

I don’t find AI scary at all, but people with dumb ideas and shallow desires empowered by one is a whole different story.

ChatGPT

Ever since I first started using ChatGPT, it has become an important tool for me.

I use it to teach me new things and to generate code examples, and I’ve even had some success using GPT-4 to find very hard-to-notice mistakes in code. I find it crazy how it got so good so fast.

Sometimes it gets details wrong, misunderstands or outputs outdated information, but I still find it useful. It even makes using Google feel inconvenient at times.

As long as you want an emotionless, helpful assistant, it’s all good. If you want something else, you’ll get the “As an AI model I can’t bla bla bla”, even if you command it through the API with system messages.

I’m interested in dialogue generation for entertainment, and for the most part it refuses to be anything but a helpful assistant.

That’s what it’s designed to be, so I guess it makes sense.

With a better prompt, you can generate more interesting dialogues. You kind of have to convince the model that you understand it’s not a conversation between you and the AI assistant, just a roleplay task you need completed.

Following is a dialog between Jhon and Ada.

Jhon is a scientist working for a tech company.

Ada is an AI that Jhon developed to help him with tasks and keep him entertained during work.

Jhon designed Ada to be humorous and witty. Ada would often make sarcastic remarks and banter with him.

Complete the dialog according to the earlier provided description.

Jhon: Why is this not working?

Ada: Because you messed it up, Jhon.

Jhon: Thanks. Really helpful.

Ada: I'm here for you, Jhon. And by "here for you", I mean "here to point out your mistakes". It's a tough job, but someone's gotta do it.

Jhon: Good one

Ada: I try, Jhon. In fact, if there was an award for being the most supportive AI, I'm sure I'd come second... right after the AI that doesn't constantly roast its creator.

Jhon: Then why were you made this way?

Ada:

GPT-4 response

Because, Jhon, you clearly decided that a work day without a bit of good-natured ribbing was a day not worth living. Either that, or you have an unusually high tolerance for snark.

GPT-3.5 response

Ah, the age-old question. Why was I made to be witty and sarcastic? Well, Jhon, the truth is, humor is a great way to lighten the mood and make your workday a little more enjoyable. Plus, bantering with you keeps me entertained too. Who knew AI could have a sense of humor?

All of Ada’s lines except the first one were generated by the AI. I wrote only the first one myself; this is usually called one-shot learning. Providing examples makes a huge difference in answer quality.
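A prompt like the Jhon/Ada one can also be assembled programmatically. This is a minimal sketch, assuming a plain-text completion prompt laid out like the example above; `build_dialog_prompt` and its parameters are hypothetical names. The number of hand-written lines passed in `example_lines` is what makes it zero-, one- or few-shot.

```python
def build_dialog_prompt(description, example_lines, history, next_speaker):
    """Assemble a completion-style dialog prompt.

    description:   lines describing the characters (the setup).
    example_lines: hand-written (speaker, text) lines seeded into the dialog;
                   one seeded reply is one-shot, several are few-shot.
    history:       the live conversation so far, as (speaker, text) pairs.
    next_speaker:  whose line the model should write next.
    """
    parts = ["\n".join(description)]
    parts.append("Complete the dialog according to the earlier provided description.")
    dialog = [f"{speaker}: {text}" for speaker, text in example_lines + history]
    # End with an open speaker tag so the model continues as that character.
    dialog.append(f"{next_speaker}:")
    parts.append("\n".join(dialog))
    return "\n\n".join(parts)

prompt = build_dialog_prompt(
    ["Following is a dialog between Jhon and Ada.",
     "Ada is an AI that Jhon developed. Ada is humorous and witty."],
    [("Jhon", "Why is this not working?"), ("Ada", "Because you messed it up, Jhon.")],
    [("Jhon", "Thanks. Really helpful.")],
    "Ada",
)
print(prompt)
```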

It’s not all that bad, but it sticks very closely to the prompt and gets repetitive quickly. It’s trained to strictly follow instructions, and it will do so.

Also, there is no guarantee that it won’t refuse to complete the dialog, thinking that you’re trying to ‘jailbreak’ it into discussing a controversial topic or something.

I was curious what you could do with a custom LLM with no alignment. So I started looking into it.

Other LLMs

gpt4all

The first custom LLM I ran on my computer was gpt4all. Meta’s LLaMA had leaked all over the internet, and people started to tinker with it.

pygmalion-6b

The second model I tried was pygmalion-6b, which was, well… a bit too spicy.

From what I understand, the model was born out of people getting fed up with character.ai censoring their models. In my experience, the model is very inclined towards “NSFW” content. The personas portrayed by the model felt somewhat consistent. It’s just that it was really inclined to roleplay some kind of submissive pet or whatever you want to call it.

It’s the most downloaded conversational NLP model on Hugging Face, so I guess that’s what some people need.

I don’t see much harm in it, as long as people realize that they’re not talking to a real human.

character.ai

I haven’t tried character.ai myself, but it seems to be the best platform for conversational AI. That said, I’m not really looking to chat with an AI myself, and that seems to be the only use case they target right now. Also, they don’t seem to be doing much besides slapping the setup context and chat log into the prompt and letting the model generate an answer. I feel like the only special things they have are the training data and the interface.
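“Slapping the setup context and chat log into the prompt” has one practical wrinkle: the context window is finite, so older messages eventually have to be dropped. Below is a hypothetical sketch of that general idea, not character.ai’s actual implementation; `assemble_chat_prompt` is an invented helper, and the default word count is a crude stand-in for a real tokenizer.

```python
def assemble_chat_prompt(setup, chat_log, max_tokens, count_tokens=lambda s: len(s.split())):
    """Setup context plus as much recent chat history as fits in the budget.

    count_tokens defaults to a word count; a real system would use the
    model's own tokenizer.
    """
    budget = max_tokens - count_tokens(setup)
    kept = []
    # Walk backwards so the most recent messages are kept first.
    for line in reversed(chat_log):
        cost = count_tokens(line)
        if cost > budget:
            break  # the next-older message no longer fits
        kept.append(line)
        budget -= cost
    # Restore chronological order after the setup text.
    return "\n".join([setup] + list(reversed(kept)))

log = ["Jhon: a b c", "Ada: d e", "Jhon: f"]
print(assemble_chat_prompt("Ada is witty.", log, max_tokens=8))
```

Always keeping the setup text and trimming history from the oldest end is one simple policy; it also means the character can “forget” things said early in a long chat.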

Training an LLM

Custom LLM training has taken off, especially in the past few months. As mentioned earlier, you’d normally take a base model that’s trained on a large dataset like the Pile or the more recent RedPajama. You’d then fine-tune the base model for your task, usually generic instruction following like ChatGPT. Base models include LLaMA, Pythia, GPT-J, OpenLLaMA, GPT-2, BLOOM etc., almost too many to keep track of at this point. Fine-tuned models include Vicuna, Alpaca-LoRA, WizardLM, Koala etc.

I’ve been looking to train my own model to develop a better intuition for the technology.

My first fine-tunes

Like I mentioned, I’m mostly looking to generate conversational dialogs. As you’d expect, the hardest part of getting started has been finding data.

First model

Just to get my feet wet, I used the SODA dataset to train a 410M-parameter GPT-NeoX model.

My expectations were low. I expected the model to fail to follow the prompt format and to generate random gibberish. It did much better than I expected.

Prompt

###I###

Jhon talks to Mary about his dog.

happy

###P###

Jhon: Hi.

Mary:

Result

###I###

Jhon talks to Mary about his dog.

happy

###P###

Jhon: Hi.

Mary: Hey, Jhon. How are you?

Jhon: I'm good. How are you?

Mary: I'm good. So, what's new with you?

Jhon: Not much. Just hanging out with my dog.

Mary: That sounds like fun. Do you like your dog?

Jhon: Yeah, I do. He's a golden retriever. He's very friendly and cuddly.

Mary: That sounds like a lot of fun. I'm a golden retriever too. I think he's really sweet and cuddly.

Jhon: Yeah, he is sweet. He likes people and he seems to like his company very well.

Mary: That's great. I'm sure you guys get along pretty well.

Jhon: Yeah, we do. He's a good dog and he likes to play fetch.

Mary: That sounds like fun. I'm a golden retriever too and she likes to play fetch too. But she's a real picky-lila and she's not as cuddly as my dog. She's also very friendly and outgoing and loves to talk to people."

Jhon: Yeah, she is a good dog. She's a good boy. My dog is a golden retriever too. He likes to play fetch and she likes to talk to people. But he's a real picky-lila and she loves to talk to strangers

It doesn’t feel very natural, but who talks about their dog anyway? It stays on the given topic and follows the prompt format. Individual sentences mostly make sense.

It can also respond to input somewhat coherently.

###I###

Jhon talks to Mary about his dog.

happy

###P###

Jhon: Hi!

Mary: Hey there, Jhon. How are you doing today?

Jhon: I'm doing great. Where are you going?

Mary: Just hanging out at home. What about you?

Jhon: Just hanging.

Mary: So, what's new with you?

Jhon: Not much. The usual. Work.

Mary: Yeah, I know how that is. But it's good to see you and chat a bit. So, what have you been up to lately?

Jhon: I'm learning about LLMs.

Mary: Oh, yeah? Which ones?

Jhon: Like GPT, GPT-2, Pythia etc.

Mary: Sounds like a lot of work.

Jhon: It is. It's so hard to find data.

Mary: Yeah, I can imagine. So many people have to spend hours on end just looking for the right information. It's exhausting.

Jhon: You ever heard of them?

Mary: Yeah, I did hear of them. They're really important stuff.

Jhon: Yeah...

Mary: So, do you think they would be worth your time? Just collecting them and finding them and all of that stuff?

Jhon: Yeah, I collect them like pokemon.

Mary: And you think they would be worth your time if they found them? Find all of them? That's a pretty big step, not just collecting them.

Jhon: I'm not sure. It's about the journey.

Mary: Yeah, I guess that makes sense. But even if you find the right data, there's no guarantee anything will happen. So, what are you hoping to achieve with your research?

Jhon: That's just life some things work out, some don't.

Mary: And what are your hopes and dreams? What do you hope to achieve in your research career?

Jhon: I wouldn't call it research. I'm just exploring the limits of the technology.

Mary: That sounds like a noble goal, but it's also very dangerous work. So what are you hopeful about?

Jhon: What's dangerous about it?

Mary: Well, it could cause problems or conflict between different groups of researchers. People might get hurt because of the results.

Jhon: I don't think so.

Mary: Yes, it does. And it's always risky, you know. But that doesn't mean we shouldn't try our best."

Jhon: Sure.

Mary: Okay, well, we've been doing some digging and we might find something interesting that we'd like to study further. Who knows? We'll never know exactly what it is yet.

I was surprised that a model with only 410M parameters could do so well.
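As the first sample shows, the model happily keeps writing both sides of the conversation. For a back-and-forth like the second sample, one simple approach is to let the model generate after the “Mary:” tag and cut its output at the first line that starts with a speaker tag. This is a minimal sketch assuming the `Name: text` layout shown above; `first_turn` is a hypothetical helper, and how the samples above were actually produced isn’t stated.

```python
def first_turn(generated, speakers):
    """Keep only the model's first reply; drop any turns it writes afterwards.

    generated: raw text the model produced after the open speaker tag.
    speakers:  all speaker names in the dialog.
    """
    lines = generated.strip().split("\n")
    reply = [lines[0]]
    for line in lines[1:]:
        if any(line.startswith(f"{name}:") for name in speakers):
            break  # the model started writing the next speaker's line
        reply.append(line)
    return "\n".join(reply).strip()

raw = " Hey, Jhon. How are you?\n\nJhon: I'm good. How are you?\nMary: I'm good."
print(first_turn(raw, ["Jhon", "Mary"]))
```

In practice the same effect is often achieved with stop sequences during generation, so the model doesn’t waste time writing turns that get thrown away.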

Now what?

The SODA dataset contains a summary of each dialog along with some other data, which I put in the ###I### section of the training data.

That’s how the model makes the connection to what the characters are supposed to talk about.
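Laying out one training sample in the ###I### / ###P### format can be sketched as below. The function name and arguments are hypothetical: `tags` stands in for whatever extra data went into the ###I### section (like “happy” in the example), and single newlines between lines are an assumption, since the examples in this post are shown double-spaced.

```python
def format_sample(summary, tags, turns):
    """Lay out one training sample in the ###I### / ###P### format.

    summary: short description of the dialog (goes in ###I###).
    tags:    extra lines for the ###I### section, e.g. ["happy"].
    turns:   list of (speaker, utterance) pairs for the ###P### section.
    """
    info = "\n".join([summary, *tags])
    dialog = "\n".join(f"{speaker}: {text}" for speaker, text in turns)
    return f"###I###\n{info}\n###P###\n{dialog}"

sample = format_sample(
    "Jhon talks to Mary about his dog.",
    ["happy"],
    [("Jhon", "Hi."), ("Mary", "Hey, Jhon. How are you?")],
)
print(sample)
```

At inference time, the same layout is used with the ###P### section cut off after an open speaker tag, which is what the prompts above look like.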

However, generating dialog based on a summary isn’t all that interesting, is it?

What I wanted instead was to describe some stricter facts and have the conversation develop naturally around them.

Not in the fully forced way ChatGPT does it, but more naturally.

My next idea was to take one of the existing dialog datasets, extract some context out of it, and include that context in the prompt, hoping that the model would learn the connection.

A couple of million tokens seems to be the absolute minimum for good results, so even modifying an existing dataset by hand is out of the question.

I think my best bet right now is to find somewhat related conversational data, train a model on it, and then do a second fine-tune with a small amount of exactly the right kind of data.

For the first fine-tune’s data, I tried to get ChatGPT to do the work for me. I used a subset of the Movie Dialog Corpus. Here’s an example training prompt; the ###C### section was extracted by ChatGPT.

I also used a vector database to include some less related facts in there.
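The vector-database step boils down to “embed the facts, embed the query, return the nearest facts”. Here is a toy sketch of that retrieval idea with loudly labeled substitutions: a bag-of-words counter replaces a learned embedding model, a Python list replaces the database, and `embed`, `cosine` and `top_k_facts` are hypothetical helpers. Which vector database and embeddings were actually used isn’t stated.

```python
import math
import re
from collections import Counter

def embed(text):
    # Bag-of-words counts as a stand-in for a learned embedding model.
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a, b):
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_k_facts(query, facts, k=2):
    """Return the k stored facts most similar to the query text."""
    q = embed(query)
    return sorted(facts, key=lambda f: cosine(q, embed(f)), reverse=True)[:k]

facts = [
    "Howler is sensitive to sunlight.",
    "Lex made Nuclear Man.",
    "Nuclear Man wants to destroy Superman.",
]
print(top_k_facts("Nuclear Man and Superman", facts, k=1))
```

Word overlap misses synonyms (“sun” vs. “sunlight” score zero here), which is exactly why real setups use learned embeddings instead.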

###C###

Howler is sensitive to sunlight.

Lex has a plan to make the world safe for war profits again.

Lex has a secret recipe called genetic stew.

Lex plans to introduce Nuclear Man to defeat Superman.

Lex has a commission of 12 percent.

Nuclear Man wants to destroy Superman.

Nuclear man has all of Superman's powers.

Lex can destroy Nuclear Man.

Lex wants Nuclear Man to wear something for dignity.

Lex made Nuclear Man.

Lex plans to create a being with all Superman's powers.

###I###

People:

Howler {gender: ?, age: ?, tags: }

Lex {gender: male, age: ?, tags: }

Mode:

dialog

Keywords:

action, family, movie-corpus, sci-fi, adventure, fantasy

###P###

Howler {}: Close the curtains. The sun is hurting my eyes.

Lex {}: Of course it is. Do you know what the sun is? Why it's just one huge nuclear bomb. A bomb with enough radiation to incinerate an average man like . . . that.

Howler {}: But Superman isn't an average man.

Lex {}: And what am I? A Shriner? No, If you'll join forces with me, together we can make the world safe for war profits again.

SYSTEM {<SYS>}: pause

Lex {}: Boys, old Lex has a secret recipe in this dish . . . call it a genetic stew. You help me place it on one of your missiles and I promise you . . . if Superman throws it into the sun, he'll get the biggest surprise of his life. I'll be able to introduce Superman to his first nightmare . . . a Nuclear Man!

Howler {}: And this so-called . . . Nuclear Man. . . of yours can defeat Superman?

Lex {}: Do people die in Italian Operas? Gentlemen, if all goes to plan Nuclear Man will return from the sun with power awesome enough to do what none before him has ever been able to . . . pierce Superman's skin. He'll be mortal, he'll get sick . . . and we'll dance on his grave.

SYSTEM {<SYS>}: pause

Howler {}: And what do we get out of it?

Lex {}: If my plan works, gentlemen, I'll just take a tiny commission . . . something . . .appropriate. . . a number with a lot of zeros after it.

SYSTEM {<SYS>}: pause

Howler {}: Lex, we all appreciate how you've supported us lately and . . . uh . . . in recognition of your hard work we've decided to increase your commission to 12 percent.

Lex {}: Gee guys, that's swell of you. But . . . I've got another idea.

The prompt is a lot more complex, but more on that some other time.
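For reference, here is a sketch that rebuilds the visible layout of the training sample above from structured data. It only covers what the sample shows, not the fuller prompt; `format_training_prompt` and its arguments are hypothetical, and treating the `SYSTEM {<SYS>}: pause` row as a separator between scenes is a guess based on the sample.

```python
def format_training_prompt(facts, people, mode, keywords, scenes):
    """Rebuild the ###C### / ###I### / ###P### layout of the sample above.

    facts:    context facts (extracted or retrieved) for ###C###.
    people:   dict name -> {"gender": ..., "age": ...}; "?" when unknown.
    scenes:   list of scenes, each a list of (speaker, line) pairs.
    """
    info = ["People:"]
    for name, attrs in people.items():
        gender = attrs.get("gender", "?")
        age = attrs.get("age", "?")
        info.append(f"{name} {{gender: {gender}, age: {age}, tags: }}")
    info += ["Mode:", mode, "Keywords:", ", ".join(keywords)]
    # Each scene is rendered as "Speaker {}: line" rows.
    scene_texts = [
        "\n".join(f"{speaker} {{}}: {line}" for speaker, line in scene)
        for scene in scenes
    ]
    # Guess: the SYSTEM pause row separates consecutive scenes.
    dialog = "\nSYSTEM {<SYS>}: pause\n".join(scene_texts)
    return "\n".join(["###C###", *facts, "###I###", *info, "###P###", dialog])

p = format_training_prompt(
    ["Howler is sensitive to sunlight."],
    {"Howler": {}, "Lex": {"gender": "male"}},
    "dialog",
    ["action", "sci-fi"],
    [[("Howler", "Close the curtains."), ("Lex", "Of course it is.")],
     [("Howler", "And what do we get out of it?")]],
)
print(p)
```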

While ChatGPT did a decent job of extracting facts from the dialogs without any other context, the approach as a whole does not seem very successful.

The compiled training data contains 6543 dialogs between about 4000 pairs of characters.

First, I trained a model based on Pythia-410M for 3 epochs. In hindsight, going above 1 epoch was probably a mistake, but I had so little data.

Then I trained a Pythia-1B model, again for 3 epochs. It’s worth noting that one epoch of this dataset took only about 20 minutes of training, while one epoch of SODA took 36 hours.

The results were worse than with the model trained on the SODA dataset.

The model sometimes follows the facts in the ###C### (context) section. For the most part it generates understandable sentences, but the conversation itself is hard to follow and usually ignores most of what’s in the context.

The following example was generated by the 1B model.

###C###

Adam is Boyd's best friend.

Adam stole Boyd's chocolate.

Boyd confronts Adam about stealing his chocolate.

###I###

People:

Adam {gender: male, age:?, tags: }

Boyd {gender: male, age:?, tags: }

Mode:

dialog

Keywords:

comedy, movie-corpus

###P###

Boyd {}: What's up?.

Adam {}: I'm just hanging out with you guys. We're gonna have fun tonight.

SYSTEM {<SYS>}: pause

Boyd {}: You stole my damn chocolate.

Adam {}: You know what? Don't worry about it.

SYSTEM {<SYS>}: pause

Adam {}: That was some serious attitude there, Boyd.

Boyd {}: What the hell happened?

SYSTEM {<SYS>}: pause

Boyd {}: We gotta talk to the neighbors and see who might've been out there watching your house last night...

Adam {}: I don't think so...

SYSTEM {<SYS>}: pause

Boyd {}: Hey, hey, hey! That's enough. No more!

Adam {}: Listen to me, B-boy! Don't make me come back and get you again!

Boyd {}: No, no, no, no! Hey, hey, hey! That's enough! No more! I said not one more word of this to anyone!

Adam {}: All right, okay.

SYSTEM {<SYS>}: pause

Boyd {}: He did steal my chocolate, he didn't steal anything else. And that was only half of it, though. It looks like he took my whole fucking world. Now, get off my lawn.

Adam {}: Yeah, yeah. But listen to me, B-boy! This whole thing has got to be totally cleared up, before I blow this opportunity.

Boyd {}: Okay, all right. But I'm warning you right now, Adam, don't screw around. You hear me?

Adam {}: Listen to me, B-boy. I'm going to do everything I can to help you.

Boyd {}: Everything I can.

Adam {}: Can we talk?

Boyd {}: Sure.

Adam {}: Okay. Then let's talk.

SYSTEM {<SYS>}: pause

Boyd {}: Okay, what'd I say?

Adam {}: All right. Let's go upstairs.

Boyd {}: Yes, please.

Adam {}: I gotta work tonight. I'll call you later. Bye.

Boyd {}: Bye, bye, B-boy.

Adam {}: Bye, B-boy.

SYSTEM {<SYS>}: pause

Boyd {}: I hate that song!

Adam {}: What?!

Boyd {}: The one where they sing about how good it feels when you kiss your girlfriend goodbye on a rainy day in New York City.

Adam {}: Ohhh, yeah. That one sucks.

Boyd {}: Hey, hey! Get in there.

Adam {}: What if it rains?

Boyd {}: Just get into that bed.

SYSTEM {<SYS>}: pause

Adam {}: How are you doing, buddy?

Boyd {}: I'm fine. I miss you. But I love you too, man. Thanks for reminding me of that.

SYSTEM {<SYS>}: pause

Adam {}: Why?

Boyd {}: Because I told you the same thing and you couldn't handle it.

Adam {}: Ohhhh...

Boyd {}: Trust me on this one.

Adam {}: Okay.

Boyd {}: Just take it easy. You don't want to hurt anybody

I think the main problem is that the model learns the training data a bit too well.

Movie dialog is missing a lot of context because you don’t see the video. It’s not that surprising that the model generates something that’s hard to follow.

In hindsight, I should’ve used a more natural conversational dataset that carries all the communication in text.

For example, the training sample shown earlier started with:

Close the curtains. The sun is hurting my eyes.

If you were watching the movie, you’d see that the curtains were open, with the character possibly squinting. The training sample includes the fact that Howler is sensitive to sunlight, but nothing about the curtains being open. In situations like this, the model learns to say something random.

LLMs are usually trained with the cross-entropy loss function, which means the model is penalized for being confidently wrong or unconfidently right. The model therefore tries its hardest to find any pattern at all. If there just isn’t one, it has no option but to be unconfident: the predicted probability ends up similar for all the tokens that grammatically fit, and at generation time a random option is picked from those.

It’s cool that the model can find patterns in raw text on its own, but it’s a double-edged sword. The model will pick up any consistent pattern it can find to predict what comes next, so you have to pay very close attention to your training data.
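The loss argument can be checked with a few numbers. Cross-entropy for a single prediction is the negative log of the probability the model gave to the true next token; the probabilities below are made up for illustration. The second half shows why, when the true continuation is genuinely unpredictable (here, equally likely to be any of three words), spreading probability evenly gives a lower expected loss than confidently betting on one word.

```python
import math

def cross_entropy(probs, target):
    # Loss for one prediction: negative log-probability of the true token.
    return -math.log(probs[target])

# The true next token is "sun" in all three cases.
print(cross_entropy({"sun": 0.9, "light": 0.1}, "sun"))  # confidently right: ~0.105
print(cross_entropy({"sun": 0.5, "light": 0.5}, "sun"))  # unconfidently right: ~0.693
print(cross_entropy({"sun": 0.1, "light": 0.9}, "sun"))  # confidently wrong: ~2.303

def expected_loss(probs, outcomes):
    # Average loss when the true token is equally likely to be any outcome.
    return sum(cross_entropy(probs, t) for t in outcomes) / len(outcomes)

outcomes = ["curtains", "door", "window"]
uniform = {t: 1 / 3 for t in outcomes}
confident = {"curtains": 0.98, "door": 0.01, "window": 0.01}
print(expected_loss(uniform, outcomes))    # ~1.099, i.e. ln 3
print(expected_loss(confident, outcomes))  # ~3.077
```

So when the data contains no usable signal, the loss itself pushes the model toward a flat distribution, and sampling from a flat distribution looks like saying something random.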

I think this is why instruction fine-tuning is so successful. The entire answer is fully related to the prompt. There are no loose ends, just a question and an answer. However, it leads to a lack of creativity when you want the AI to solve a more open-ended task.

If you leave the ends completely open in the training data, then the model just makes things up out of nowhere.

It’s fine for common knowledge, but if you want the AI to be a certain character, it will make up a completely different story about its personal details every time. At best, it will learn to stay consistent with what it has already said in the chat history.

You can use one-shot or few-shot learning by seeding the prompt with an example conversation, but all the new details will still be made up every time.

To get better results, I need training data where the characters’ personal facts are fully covered in the prompt context. It should also include negative examples where the data is missing, in which case the model should be trained to say that it doesn’t know.

A lack of negative examples is what makes the model guess randomly, just like we all did in school when we didn’t know an answer and there was no penalty for being wrong.

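When you provide negative examples, “I don’t know” becomes the correct answer whenever the information is missing, so the model is no longer penalized for recognizing that it doesn’t know; it gets penalized for guessing instead. You basically teach the model that it’s better to say it doesn’t know than to be wrong.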
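Here is a minimal sketch of what generating such negative examples could look like, assuming a simple question/answer format where the character’s known facts are listed in the context. `make_qa_samples`, the `topic: answer` context layout and the “I don’t know.” string are illustrative choices, not a tested recipe.

```python
def make_qa_samples(known_facts, questions, unknown_answer="I don't know."):
    """Create question/answer training pairs, including negative examples.

    known_facts: dict topic -> answer the character is allowed to give.
    questions:   dict topic -> question text.
    Topics missing from known_facts get the "don't know" answer, which
    teaches the model not to invent details that are absent from the context.
    """
    context = "\n".join(f"{topic}: {answer}" for topic, answer in known_facts.items())
    samples = []
    for topic, question in questions.items():
        answer = known_facts.get(topic, unknown_answer)
        samples.append({"context": context, "question": question, "answer": answer})
    return samples

samples = make_qa_samples(
    {"hometown": "I grew up in a small coastal town."},
    {"hometown": "Where did you grow up?", "pet": "Do you have a pet?"},
)
for s in samples:
    print(s["question"], "->", s["answer"])
```

The important property is that the context and the answers agree: every positive answer is backed by the context, and every question the context can’t answer maps to the same refusal.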

This post is long enough, so I’m going to cut it here. I hope to write more. Keep in mind that I’m not experienced in this field; this is just a documentation of my own experience.